Intro to Relari

🚀 Welcome to Relari Docs

In the Getting Started section, we will walk through:

- The high-level Philosophy and Concepts behind Relari's data-driven evaluation and improvement toolkit.

- How to use the Relari Cloud UI to generate datasets, run experiments, and auto-improve your LLM applications.

For specific references and code examples, please visit the Python SDK & CLI, REST API and Metrics sections.

🎯 Purpose

LLMs are incredibly powerful but tricky to work with because they are non-deterministic. This often means LLM applications get stuck in the alpha/beta phases, with no clear path to true production, especially for mission-critical applications.

To get to production, AI developers have tons of options to sift through: different models, prompting strategies, RAG architectures, agent setups, and more. The best choice often depends on the specific use case. For example, a customer support chatbot and a legal copilot will likely need very different system designs. With so many options, it's hard to figure out the right path forward.

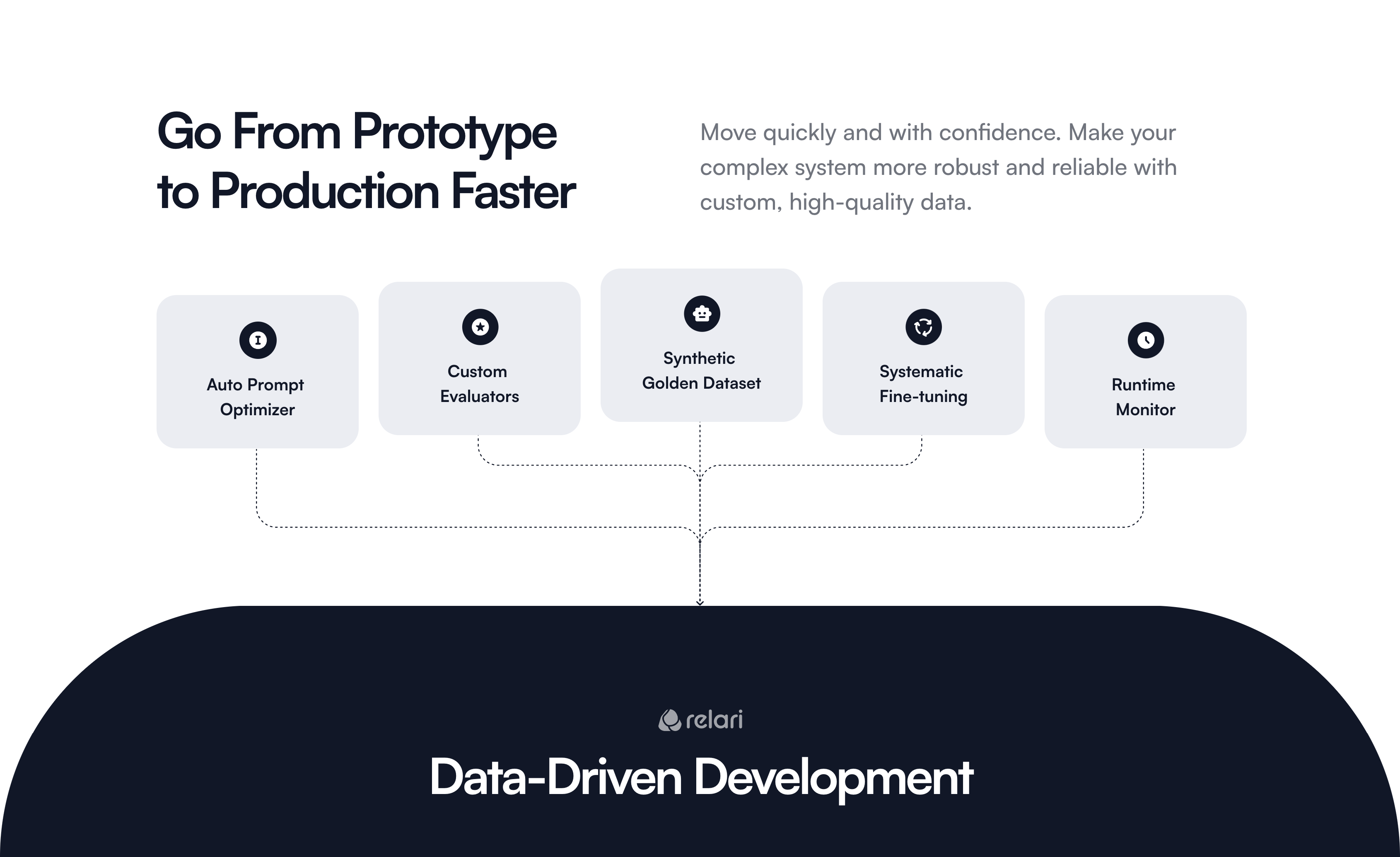

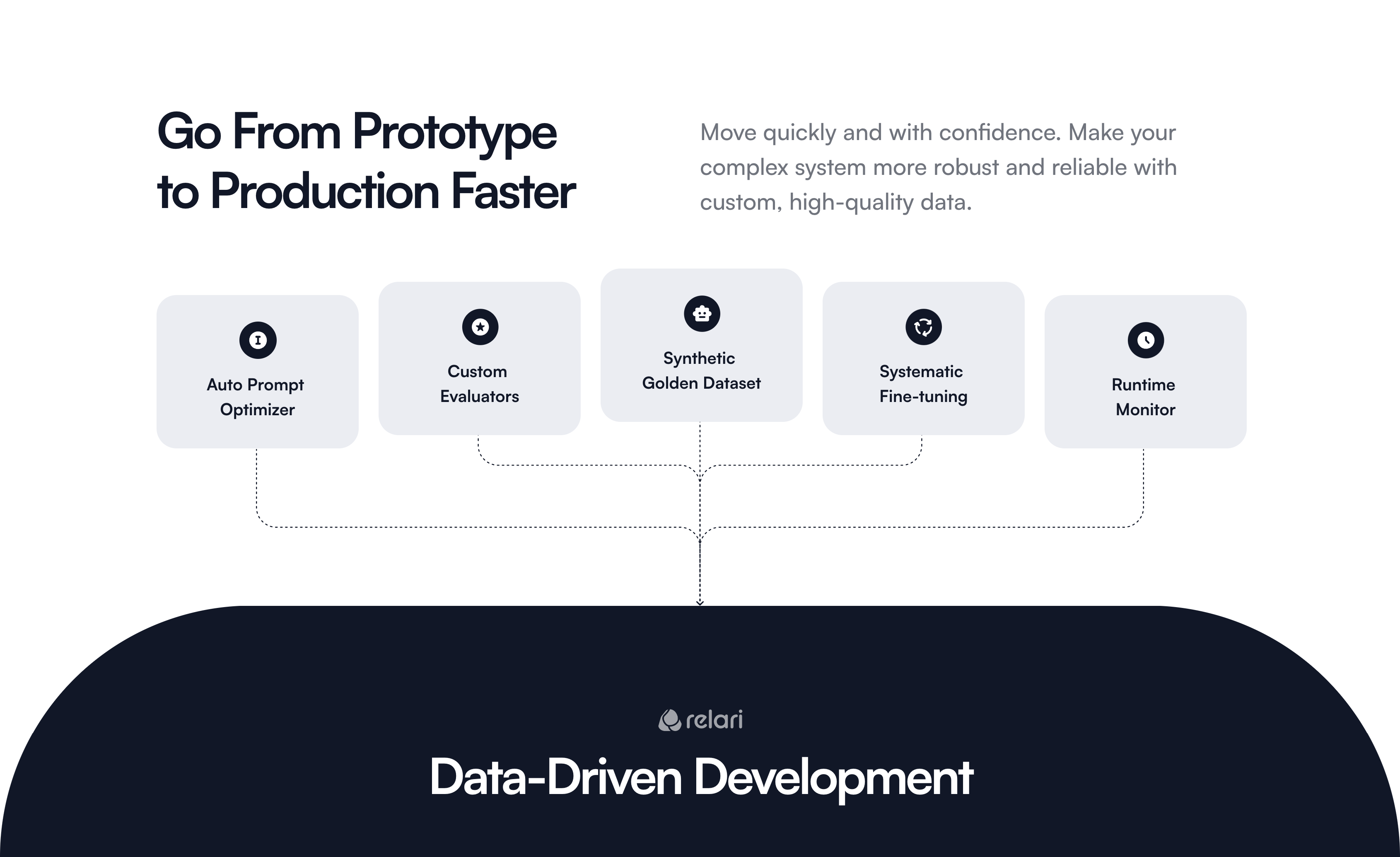

Relari is here to take the guesswork out of this process. We use a data-driven approach to help AI teams systematically make these design choices and get from demo to production faster.

📊 Why a Data-Driven Approach?

Data can be your secret weapon to make your AI app stand out. Leveraging use-case-specific data helps you understand your app's performance better, automate parts of the iteration process, and make your application more reliable.

Using data throughout your development—from early experiments to after launch—lets you make confident decisions and boosts user satisfaction.

How Relari Helps AI Teams:

- Rigorous Evaluation and Benchmarking: Use comprehensive, tailored metrics to measure and compare performance.

- Golden Dataset Generation: Curate high-quality datasets from human labels or generate them synthetically at scale.

- Automated Prompt Optimization: Automatically optimize prompts to capture nuanced requirements.

- Systematic LLM Fine-Tuning: Take the guesswork out of fine-tuning domain-specific LLMs to outperform large models.

- Run-time Monitoring: Monitor your LLM app's performance in production and use data to inform adjustments.

🔍 Background

Relari started as an open-source LLM evaluation framework, continuous-eval, offering 30+ metrics covering various applications. As we iterated with continuous feedback from the developer community, we realized that AI builders need much more than standard metrics, and that we can help unlock a lot more potential by building a comprehensive toolkit that enables the data flywheel in LLM products.

Whether you're already using continuous-eval or just starting with Relari Cloud, we're excited to help you build better LLM applications faster. We look forward to hearing your feedback as we shape this new category of data-driven development infrastructure.